Friday, 26 April 2024

A fast fileserver with FreeBSD, NVMEs, ZFS and NFS

I have a small server running in my flat that serves files locally via NFS and remotely via Nextcloud. This post documents the slightly overpowered upgrade of the hardware and subsequent performance / efficiency optimisations.

TL;DR

- I can fully saturate a 10Gbit LAN connection, achieving more than 1100 MiB/s throughput.

- I can perform a zpool scrub with 11 GiB/s, completing a 6.8TiB scrub in 11min.

- Idle power usage can be brought down to 34W.

Old setup and requirements

What the server does:

- Serve files via NFS to

- my workstation (high traffic)

- a couple of Laptops (low traffic)

- the TV running Kodi (medium traffic)

- Host a Nextcloud which provides file storage, PIM etc. for a handful of people

Not a lot of compute is necessary, and I have tried to keep power usage low. The old hardware served me well for a really long time:

- AMD 630 CPU

- 16GiB RAM

- 2+1 * 4TiB spinning disk RAIDZ1 with SSD ZIL (“write-cache”)

The main pain point was slow disk access resulting in poor performance when large files were read by the Nextcloud. Browsing through my photo collection via NFS was also very slow, because thumbnail generation needed to pull all the images. Furthermore, low speed meant that I was not doing as much on the remote storage as I would have liked (e.g. storing games), resulting in my workstation’s storage always running out. And I was just reaching the limits of my ZFS pool anyway, so it was time for an upgrade!

New setup

To get better I/O, I thought about switching from HDD to SSD, but then realised that SSD performance is very low compared to NVME performance, although the price difference is not that much. Also, NFS+ZFS leads to quite a bit of I/O, typically requiring the use of faster caching devices, further complicating the setup. Consequently, I decided to go for a pure NVME setup. Of course, the new server would also need 10GBit networking, so that I can use all that speed in the LAN!

This is the new hardware! I will discuss the details below.

Mainboard, CPU and RAM

The main requirement for the mainboard is to offer connectivity for four NVME disks. And to be prepared for the future, I would actually like 1-2 extra NVME slots. There are two ways to attach NVMEs to a motherboard:

- directly (“natively”)

- via an extension card that is plugged into a PCIexpress slot

Initially, I had assumed no mainboard would offer sufficient native slots, so I did a lot of research on option 2. The summary: it is quite messy. If you want to use a single extension card that hosts multiple NVMEs (which is required in this case), you need so-called “bifurcation support” on the mainboard. This lets you e.g. put a PCIe x8 card with two x4 NVME disks into a PCIe x8 slot on the mainboard. However, this feature is really poorly documented,[1] and whether you get no bifurcation, only x8 → x4x4, or also x16 → x4x4x4x4 depends on both the mainboard AND the CPU. The different PCIe versions and speeds, and the difference between the actually supported speed and the electrical interface, add further complications.

In the end, I decided to not do any experiments and look for a board that natively supports a high number of NVME slots. For some reason, this feature is very rare on AMD mainboards, so I switched to Intel (although actually I am a bit of an AMD fanboy). I probably could have gone with a board that has 5 slots, but I use hardware for a long time and wanted to be safe, so I took a board that has 6 NVME slots (2 free slots):

None of the available boards had a proper[2] 10GBit network adaptor, so having a usable PCIe slot for a dedicated card was also a requirement. It is important to check whether PCIe slots can still be used when all NVME slots are occupied; sometimes they internally share the bandwidth. But for the above board this is not the case.

Important: To be able to boot FreeBSD on this board, you need to add the following to /boot/device.hints:

hint.uart.0.disabled="1"

hint.uart.1.disabled="1"

For the CPU, I just went with something on the low TDP end of the current Intel CPU range, the Intel Core i3-12100T. Four cores + four threads was exactly what I was looking for, and 35W TDP sounded good. I paired that with some off-the-shelf 32GiB RAM kit.

Case, power supply & cooling

Strictly speaking a 2U case would have been sufficient, but I thought a 3U case might offer better air circulation. I ended up with the Gembird 19CC-3U-01. For unknown reasons, I chose a 2U horizontal CPU fan, instead of a 3U one. The latter would definitely have provided better airflow, but since the fan barely runs at all, it doesn’t make much of a difference.

I was unsuccessful in finding a good PSU that is super efficient in the average case of around 40W power usage but also covers spikes well above 100W, so I just chose the cheapest 300W one I could get :)

The case with everything in place.

The built in fans are very noisy. I chose to replace one of the intake fans with a spare one I had lying around and only connect one of the rear outtake fans. But I added an extra fan where the extension slots are to divert some airflow around the NIC—which otherwise gets quite warm. This should also blow some air over the NVME heatsinks! All fans can be regulated and fine-tuned from the BIOS of the mainboard which I totally recommend you do. At the current temperatures and average workloads the whole setup is almost silent.

Storage

Now, the fun begins. Since I needed more space than before, I clearly want a 3+1 x 4TiB RAIDZ1.

My goal was to be able to saturate a 10GBit connection (so get around 1GiB/s throughput) and still have the server be able to serve the Nextcloud without slowing down significantly. Currently the WAN upload is quite slow, but I hope to have fibre in the future. In any case, I thought that any modern NVME should be fast enough, because they all advertise speeds of multiple GiB/s.

Choice of disks

Anyway, I got two Crucial P3 Plus 4TB (which were on sale at Amazon for ~190€), as well as two Lexar NM790 4TB (which were also a lot cheaper than they are now). My assumption that they were very comparable was very wrong:

| Disk | IOPS rand-read | IOPS read | IOPS write | MB/s read | MB/s write | “cat speed” MB/s |

|---|---|---|---|---|---|---|

| Crucial | 53,500 | 794,000 | 455,000 | 2,600 | 4,983 | ~700 |

| Lexar | 53,700 | 796,000 | 456,000 | 4,578 | 5,737 | ~2,700 |

I used this fellow’s fio-script to

generate all columns except the last. The last column was generated by simply cat’ing a 10GiB file of random numbers to /dev/null which

roughly corresponds to the read portion of copying a 4k movie file.
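The “cat speed” column can be approximated with a few lines of Python. This is only a sketch of the idea, not the method used for the table: the helper names `read_throughput` and `make_random_file` are made up for illustration, and the demo uses a much smaller file than 10GiB, so it will mostly measure the cache rather than the disks.

```python
import os
import tempfile
import time


def read_throughput(path, block_size=1024 * 1024):
    """Read `path` sequentially and return the throughput in MB/s (10^6 bytes/s)."""
    total = 0
    start = time.perf_counter()
    # buffering=0 disables Python's own buffer, so each read() is one request.
    with open(path, "rb", buffering=0) as handle:
        while True:
            chunk = handle.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    # Guard against a zero interval on very small files.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return total / elapsed / 1e6


def make_random_file(size_bytes, directory=None):
    """Create a temporary file filled with random bytes and return its path."""
    handle, path = tempfile.mkstemp(dir=directory)
    with os.fdopen(handle, "wb") as out:
        remaining = size_bytes
        while remaining > 0:
            step = min(remaining, 1024 * 1024)
            out.write(os.urandom(step))
            remaining -= step
    return path


if __name__ == "__main__":
    # 16 MiB keeps the demo quick; a realistic test needs a file larger than RAM/ARC.
    sample = make_random_file(16 * 1024 * 1024)
    try:
        print(f"{read_throughput(sample):,.0f} MB/s")
    finally:
        os.remove(sample)
```

Passing a different `block_size` makes it possible to see how strongly the request size influences the result.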

Since I had two disks each, I actually took the time to test all of them in different mainboard slots, but the results

were very consistent: in real-life tasks, the Crucial disk underperformed significantly, while the Lexar disks were

super fast.

I decided to return the Crucial disks and get two more by Lexar 😎

Disk encryption

I always store my data encrypted at rest. FreeBSD offers GELI block-level encryption (similar to LUKS on Linux). But OpenZFS has also provided dataset/filesystem-level encryption for a while now. I previously used GELI, but I wanted to switch to ZFS native encryption, because it provides some advantages:

- Flexibility: I can choose later which datasets to encrypt; I can encrypt different datasets with different keys.

- Zero-knowledge backups: I can send incremental backups off-site that are received and fully integrated into the target pool without that server ever getting the decryption keys.

- Forward-compatibility: I can upgrade to better encryption algorithms later.

- Linux-compatibility: I can import the existing pool in a Linux environment for debugging or benchmarking.

However, I had also heard that ZFS native encryption was slower, so I decided to do some benchmarks:

| Disk | IOPS rand-read | IOPS read | IOPS write | MB/s read | MB/s write | “cat speed” MB/s |

|---|---|---|---|---|---|---|

| no encryption | 54,700 | 809,000 | 453,000 | 4,796 | 5,868 | 2,732 |

| geli-aes-256-xts | 40,000 | 793,000 | 446,000 | 3,332 | 3,334 | 952 |

| zfs-enc-aes-256-gcm | 26,100 | 513,000 | 285,000 | 3,871 | 4,648 | 2,638 |

| zfs-enc-aes-128-gcm | 29,300 | 532,000 | 353,000 | 3,971 | 4,794 | 2,631 |

Interestingly, GELI[3] performs much better on the IOPS, but much worse on throughput, especially on our real-life test case. Maybe some smart person knows the reason for this, but I took this benchmark as an assurance that going with native encryption was the right choice.[4] One reason for the good performance of the native encryption seems to be that it makes use of the CPU’s AVX2 extensions.

At this point, I feel like I do need to warn people about some ZFS encryption related issues that I learned about later. Please read this. I have had no problems to date, but make up your own mind.

RaidZ1

| recordsize | compr. | encrypt | IOPS rand-read | IOPS read | IOPS write | MB/s read | MB/s write | “cat speed” MB/s |

|---|---|---|---|---|---|---|---|---|

| 128 KiB | off | off | 50,000 | 869,000 | 418,000 | 3,964 | 5,745 | 2,019 |

| 128 KiB | on | off | 49,800 | 877,000 | 458,000 | 3,929 | 4,654 | 1,448 |

| 128 KiB | off | aes128 | 26,300 | 484,000 | 230,000 | 3,589 | 5,331 | 2,142 |

| 128 KiB | on | aes128 | 27,400 | 501,000 | 228,000 | 3,510 | 3,927 | 2,120 |

These are the numbers after creation of the RAIDZ1 based pool. They are quite similar to the numbers measured before.

The impact of encryption on IOPS is clearly visible, less so on sequential read/write throughput.

Compression seems to impact write throughput but not read throughput which is expected for zstd. It is unclear why

“cat speed” is lower here.

| recordsize | compr. | encrypt | IOPS rand-read | IOPS read | IOPS write | MB/s read | MB/s write | “cat speed” MB/s |

|---|---|---|---|---|---|---|---|---|

| 1 MiB | off | off | 7,235 | 730,000 | 404,000 | 3,686 | 3,548 | 2,142 |

| 1 MiB | on | off | 7,112 | 800,000 | 470,000 | 3,624 | 3,447 | 2,064 |

| 1 MiB | off | aes128 | 3,259 | 497,000 | 258,000 | 3,029 | 3,422 | 2,227 |

| 1 MiB | on | aes128 | 3,697 | 506,000 | 249,000 | 3,137 | 3,361 | 2,237 |

Many optimisation guides suggest setting the zfs recordsize to 1 MiB for most use-cases, especially storage of media

files.

But this seems to drastically penalise random read IOPS while providing little to no benefit in the sequential

read/write scenarios. This is actually a bit surprising and I will need to investigate this more.

Is it perhaps because NVMEs are good at parallel access and therefore suffer less from fragmentation anyway?

In any case, the main take away message is that overall read and write throughputs are over 3,000 MiB/s in the synthetic case and over 2,000 MiB/s in the manual case, which is great.

Other disk performance metrics

| Operation | Speed [MiB/s] |

|---|---|

| Copying 382 GiB between two datasets (both enc+comp) | 1,564 |

| Copying 505 GiB between two datasets (both enc+comp) | 800 |

| zpool scrub of the full pool | 11,000 |

These numbers further illustrate some real world use-cases. It’s interesting to see the difference between the first two, but it’s also important to keep in mind that this is reading and writing at the same time. Maybe some internal caches are exhausted after a while? I didn’t debug these numbers further, but I think the speed is quite good after such a long read/write.

More interesting is the speed for scrubbing, and, yes, I have checked this a couple of times. A scrub of 6.84TiB happens in 10m - 11m, which is pretty amazing, I think, considering that it is reading the data and calculating checksums. I am assuming that sequential read is just very fast and that access to the different disks happens in parallel. The checksum implementation is apparently also avx2 optimised.

LAN

Network adapter

Based on recommendations, I decided to buy an Intel card. Cheaper 10GBit network cards are available from Marvell/Aquantia, but the driver support in FreeBSD is poor, and the performance is supposedly also not close to that of Intel.

Many people suggested I go for SFP+ (fibre) instead of 10GBase-T (copper), but I already have CAT7 cables in my flat. While I could have used fibre purely for connecting the server to the switch (and this would likely save some power), I would have had to buy a new switch and the options were just not economical—I already have a switch with two 10GBase-T ports which I had bought for exactly this setup.

The cheapest Intel 10GBase-T card out there is the X540, which is quite old and available on Amazon for around 80€. I bought two of those (one for the server and one for the workstation). More modern cards are supposedly more energy efficient, but also a lot more expensive.[5]

NFS Performance

On server and client, I set:

- kern.ipc.maxsockbuf=4737024 in /etc/sysctl.conf

- mtu 9000 media 10gbase-t in the /etc/rc.conf (ifconfig)

Only on the server:

- nfs_server_maxio="1048576" in /etc/rc.conf

Only on the client:

- nfsv4,nconnect=8,readahead=8 as the mount options for the NFS mount.

- vfs.maxbcachebuf=1048576 in /boot/loader.conf (not sure any more if this makes a difference).

These settings allow larger buffers and increase the amount of readahead. This favours large sequential reads/writes over small random reads/writes.

The full options on the client end up being:

# nfsstat -m

X.Y.Z.W:/ on /mnt/server

nfsv4,minorversion=2,tcp,resvport,nconnect=8,hard,cto,sec=sys,acdirmin=3,acdirmax=60,acregmin=5,acregmax=60,nametimeo=60,negnametimeo=60,rsize=1048576,wsize=1048576,readdirsize=1048576,readahead=8,wcommitsize=16777216,timeout=120,retrans=2147483647

I use NFS4 for my workstation and NFS3 for everyone else. I have performed no benchmarks on NFS3, but I see no reason why it would be slower.

| IOPS rand-read | IOPS read | IOPS write | MB/s read | MB/s write | “cat speed” MB/s |

|---|---|---|---|---|---|

| 283 | 292,000 | 33,200 | 1,156 | 594 | 1,164 |

This benchmark was performed on a dataset with 1M recordsize, encryption, but no compression.

Random read IOPS are pretty bad, and I see a strong correlation here to the rsize (if I halve it, I double the IOPS; not shown in table).

It’s possible that every 4KiB read actually triggers a 1MiB read in NFS which would explain this.
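To put numbers on that hypothesis, here is a small back-of-the-envelope sketch. It assumes (and this is only the assumption from the paragraph above, not something that was verified) that every 4KiB random read fetches one full rsize-sized chunk; the function names are made up for illustration.

```python
KIB = 1024
MIB = 1024 * KIB


def amplification(request_bytes, rsize_bytes):
    """How many times more data is fetched than was actually requested."""
    return rsize_bytes / request_bytes


def wire_traffic_mib_per_s(iops, rsize_bytes):
    """MiB/s moved over the network if every read fetches one rsize chunk."""
    return iops * rsize_bytes / MIB


# Values from the table above: 283 random-read IOPS at rsize=1MiB.
print(amplification(4 * KIB, 1 * MIB))       # 256.0
print(wire_traffic_mib_per_s(283, 1 * MIB))  # 283.0
```

Under this assumption the traffic stays at roughly 283 MiB/s no matter how rsize is chosen, which would fit the observation that halving rsize doubles the IOPS. It is also well below what the link delivers for sequential reads, so bandwidth alone would not explain the limit.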

On the other hand, the sequential read and write performance is pretty good with synthetic and real world read speeds

being very close to the theoretical maximum of the 10GBit connection.

One thing to keep in mind: The blocksize when reading has a very strong impact on the performance. This

can be seen when using dd with different bs arguments. Of course, 1MiB is optimal if that is also used by NFS, and

cat seems to do this. However, cp does not which results in a much slower performance than if using dd if=.. of=.. bs=1M.

I have also done measurements with plain nc over the wire (also reaching 1,160 MiB/s) and with iperf3, which achieves 1,233 MB/s, just below the 1,250 MB/s equivalent of 10 Gbit/s.
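Since MB/s and MiB/s are easy to mix up, here is the conversion for the line rate as a short sketch (the function names are made up for illustration). It ignores Ethernet, IP and TCP overhead, so real payload throughput is always a bit lower.

```python
def gbit_to_mb_per_s(gbit_per_s):
    """Convert Gbit/s to MB/s (1 MB = 10^6 bytes)."""
    return gbit_per_s * 1e9 / 8 / 1e6


def gbit_to_mib_per_s(gbit_per_s):
    """Convert Gbit/s to MiB/s (1 MiB = 2^20 bytes)."""
    return gbit_per_s * 1e9 / 8 / 2**20


print(gbit_to_mb_per_s(10))          # 1250.0
print(round(gbit_to_mib_per_s(10)))  # 1192
```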

Power consumption and thermals

For a computer running 24/7 in my flat, power consumption is of course important. I bought a device to measure power consumption at the outlet to get an accurate picture.

idle

Because the computer is idle most of the time, optimising idle power usage is most important.

| Change | Power [W] |
|---|---|
| default | 50 |
| *_cx_lowest="Cmax" | 45 |
| disable WiFi and BT | 42 |
| media 10gbase-t | 45 |
| machdep.hwpstate_pkg_ctrl=0 | 41 |
| turn on chassis fans | 42 |
| ASPM modes to L0s+L1 / enabled | 34 |

I assume that the same setup on Linux would be slightly more efficient, but 34W in idle is acceptable.

Clearly, the most impactful changes were:

- Activating ASPM for the PCIe devices in the BIOS.

- Adding performance_cx_lowest="Cmax" and economy_cx_lowest="Cmax" to /etc/rc.conf.

- Adding machdep.hwpstate_pkg_ctrl=0 to /boot/loader.conf.

You can find online resources on what these options do. You might need to update the BIOS to be able to disable

WiFi and Bluetooth devices completely. You can also use hints in the /boot/device.hints, but this doesn’t save

as much power.

Using 10GBase-T speed on the network device (instead of 1000Base-T) unfortunately increases power usage notably, but there is nothing I could find to mitigate this.

Things that are often recommended but that did not help me (at least not in idle):

- NVME power states (more on this below)

- lower values for sysctl dev.hwpstate_intel.*.epp (more on this below)

- hw.pci.do_power_nodriver=3

| idle temperatures | °C |

|---|---|

| CPU | 37-40 |

| NVMEs | 52-55 |

The latter was particularly interesting, because I had heard that newer NVMEs, and especially those by Lexar get very warm. It should be noted though, that the mainboard comes with a large heatsink that covers all NVMEs.

under load

The only “load test” that I performed was a scrub of the pool. Since this puts stress on the NVMEs and also the CPUs, it should be at least indicative of how things are going.

| during zpool scrub | °C |

|---|---|

| CPU | 55-59 |

| NVMEs | 69-75 |

The power usage fluctuates between 85W and 98W. I think all of these values are acceptable.

| NVME power state hint | scrub speed GiB/s | Power [W] |

|---|---|---|

| 0 (default) | 11 | < 100 |

| 1 | 8 | < 93 |

| 2 | 4 | < 70 |

You can use nvmecontrol to tell the NVME disks to save energy. More information on this here

and here.

I was surprised that all of this works reliably on FreeBSD, but it does! The man-page is not great though. Simply

call nvmecontrol power -p X nvmeYns1 to set the hint to X on device Y, if desired. Note that this needs to be repeated after

every reboot.

| dev.hwpstate_intel.*.epp | scrub speed GiB/s | Power [W] |

|---|---|---|

| 50 (default) | 11.0 | < 100 |

| 100 | 3.3 | < 60 |

You can use the dev.hwpstate_intel.*.epp sysctls for your cores to tune the eagerness of each core to scale up, with a higher number meaning less eagerness.

In the end, I decided not to apply any of these “under load optimisations”. It is just very difficult, because, as shown, all optimisations that reduce watts per time also increase time. I am not certain of any good ways to quantify this, but it feels like keeping the system at 70W for 30min instead of 100W for 10min, is not really worth it. And I kind of also want the system to be fast, that’s why I spent so much money on it 🙃
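One simple way to quantify the trade-off is energy per scrub, i.e. power multiplied by duration. The sketch below uses the scrub speeds and the upper power bounds from the two tables above together with the 6.84TiB pool size; the function name and the dictionary are made up for illustration, and because the power values are only upper bounds, the results are upper bounds as well.

```python
TIB = 1024**4
GIB = 1024**3
POOL_BYTES = 6.84 * TIB  # amount of data scrubbed, taken from the text


def scrub_energy_wh(rate_gib_per_s, watts, idle_watts=0):
    """Energy in Wh for one full scrub at the given speed and power draw.

    Set idle_watts to count only the energy used on top of idling.
    """
    seconds = POOL_BYTES / (rate_gib_per_s * GIB)
    return (watts - idle_watts) * seconds / 3600


# (scrub speed in GiB/s, upper bound for power in W) from the tables above
SETTINGS = {
    "power state 0 / epp 50 (default)": (11, 100),
    "power state 1": (8, 93),
    "power state 2": (4, 70),
    "epp 100": (3.3, 60),
}

for label, (rate, watts) in SETTINGS.items():
    print(f"{label}: at most {scrub_energy_wh(rate, watts):.1f} Wh")
```

With these inputs the default settings come out at roughly 18 Wh per scrub, while the slower settings need more energy in total despite the lower power draw. The picture stays the same when only the energy above the 34W idle level is counted (idle_watts=34).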

The CPU does have a cTDP mode that can be activated via the BIOS and which is “worth it”, according to some articles I have read. I might give this a try in the future.

Final remarks

What a ride! I spent a lot of time optimising and benchmarking this and I am quite happy with the outcome. I am able to exhaust the 10GBit LAN connection completely, and still have resources left on the server :)

Thanks to the people at www.bsdforen.de who had quite a few helpful suggestions.

If you see anything that I missed, or have suggestions on how to improve this setup, let me know in the comments!

Footnotes

[1] With ASUS being the only exception.

[2] Proper in this context means well-supported by FreeBSD and with good performance. Usually, that means an Intel NIC. Unfortunately, all the modern boards come with Marvell/Aquantia AQtion adaptors, which are not well-supported by FreeBSD.

[3] The geli device was created with: geli init -b -s4096 -l256

[4] I wanted to perform all these tests with Linux as well, but I ran out of time 🙈

[5] I did try a slightly more modern adapter with an Intel 82599EN chip. This is an SFP+ chip, but I found an adaptor with built-in 10GBase-T for around 150€. It ended up having some driver issues (you needed to plug and unplug the CAT cable for the device to go UP), and it used more energy than the X540, so I sent it back.

Tuesday, 23 April 2024

Meta Horizon OS, Replicant and the GPL

Meta Horizon OS is a variant of Android, which includes GPLed parts, including the kernel Linux. Replicant is a fully free variant of Android that can run on smartphones and other kinds of devices. As the maintainer of libsurvive I have been working on a port to Android/Replicant which is known to work well with the Rockchip RK3399.

A few weeks ago, I asked for the source code for the Oculus-Linux kernel, but so far the Meta Quest still does not comply with the GPL. So I plan to make my own device running Replicant and maybe Guix, codenamed the Replica Quest. The Replica Quest will include a user interface called LibreVR Mobile, and on my POWER9 system I use LibreVR Desktop. Any part that Meta releases as free software can be integrated into Replicant. Non-free parts need to be replaced, which will be hard work.

Sunday, 21 April 2024

IWP9

Like last year, I attended the 10th International Workshop on Plan 9 remotely, presenting a talk about porting Inferno to various Cortex-M microcontrollers.

The presentation covers things that I mentioned in my previous post, so I won't go into details. My diary entry has links to the video and materials.

Categories: Inferno, Free Software

KDE Gear 24.05 branches created

Make sure you commit anything you want to end up in the KDE Gear 24.05

releases to them

Next Dates

- April 25 2024: 24.05 Freeze and Beta (24.04.80) tag & release

- May 9, 2024: 24.05 RC (24.04.90) Tagging and Release

- May 16, 2024: 24.05 Tagging

- May 23, 2024: 24.05 Release

https://community.kde.org/Schedules/KDE_Gear_24.05_Schedule

Saturday, 20 April 2024

Protokolo

On-and-off over the past few months I wrote a new tool called Protokolo. I wrote earlier about how I implemented internationalisation for this project. This blog post is a simple and short introduction to the tool.

Protokolo—Esperanto for ‘report’ or ‘minutes’—is a change log generator. It solves a very simple (albeit annoying) problem space at the intersection of change logs and version control systems:

- Different merge requests all edit the same area in CHANGELOG, inevitably resulting in merge conflicts.

- If a section for an unreleased version does not yet exist in the main branch’s CHANGELOG (typically shortly after release), feature branches must create this section. If multiple feature branches do this, you get more merge conflicts.

- Old merge requests, when merged, sometimes add their change log entry to the section of a release that is already published.

Protokolo gets rid of the above problems by having the user create a separate file for each change log fragment (think: one new file per merge request). Then, just before release, all the files get concatenated into a new section in CHANGELOG.
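The fragment idea can be sketched in a few lines of Python. This is not Protokolo's actual code or file layout: the function name `compile_fragments`, the `*.md` naming scheme and the Markdown heading are all assumptions made for illustration.

```python
import tempfile
from pathlib import Path


def compile_fragments(fragment_dir, version):
    """Build one change log section from all *.md fragments in a directory."""
    # Sorting by file name gives a stable order, e.g. via numeric prefixes.
    fragments = sorted(Path(fragment_dir).glob("*.md"))
    body = "\n".join(
        path.read_text(encoding="utf-8").strip() for path in fragments
    )
    return f"## {version}\n\n{body}\n"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Each merge request adds its own file, so the files never conflict.
        Path(tmp, "001_add.md").write_text("- Added a flag.\n", encoding="utf-8")
        Path(tmp, "002_fix.md").write_text("- Fixed a crash.\n", encoding="utf-8")
        print(compile_fragments(tmp, "1.2.0"))
```

Because every merge request only ever creates a new file, none of the three conflict scenarios listed above can occur; the section is assembled once, at release time.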

This idea is not exactly new. Towncrier does the same thing. Protokolo is only different in some of the implementation details.

The documentation of Protokolo is—I think—excellent and comprehensive. To prevent myself from repeating things in this blog post, I recommend reading the documentation for a usage guide.

Barring some improvements I want to do to Click and internationalisation, I think the project is finished, insofar as software projects are ever finished. And that’s pretty cool! I should finish software projects more often.

Sunday, 14 April 2024

How to set up Python internationalisation with Click, Poetry, Forgejo, and Weblate

TL;DR—look at Protokolo and do exactly what it does.

This is a short article because I am lazy but do want to be helpful. The sections are the steps you should take. All code presented in this article is licensed CC0-1.0.

Use gettext

As a first step, you should use

gettext. This effectively

means wrapping all string literals in _() calls. This article won’t waste a

lot of time on how to do this or how gettext works. Just make sure to get

plurals right, and make sure to provide translator comments where necessary.
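As a minimal sketch of what “getting plurals right” looks like: use ngettext with both forms and pass the number, instead of branching on the count yourself. The example uses gettext.NullTranslations from the standard library as a stand-in for a real catalogue, and the function `describe` and its message are made up for illustration.

```python
import gettext

# Stand-in for a real translation catalogue: returns the English strings.
translations = gettext.NullTranslations()
ngettext = translations.ngettext


def describe(count):
    """Return a correctly pluralised message for `count` changed files."""
    # TRANSLATORS: {count} is the number of files that were changed.
    return ngettext(
        "{count} file changed", "{count} files changed", count
    ).format(count=count)


print(describe(1))  # 1 file changed
print(describe(3))  # 3 files changed
```

With a real catalogue, ngettext picks the form according to the plural rules of the target language, which may have more than two forms.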

I recommend using the class-based API. In your module, create the following file

i18n.py.

import gettext as _gettext_module

import os

_PACKAGE_PATH = os.path.dirname(__file__)

_LOCALE_DIR = os.path.join(_PACKAGE_PATH, "locale")

TRANSLATIONS = _gettext_module.translation(

"your-module", localedir=_LOCALE_DIR, fallback=True

)

_ = TRANSLATIONS.gettext

gettext = TRANSLATIONS.gettext

ngettext = TRANSLATIONS.ngettext

pgettext = TRANSLATIONS.pgettext

npgettext = TRANSLATIONS.npgettext

This assumes that your compiled .mo files will live in

your-module/locale/<lang>/LC_MESSAGES/your-module.mo. We’ll take care of that

later. Putting the compiled files there isn’t ideal (you want them in

/usr/share/locale), but it’s the best you can do with Python packaging.

In subsequent files, just do the following to translate strings:

from .i18n import _

# TRANSLATORS: translator comment goes here.

print(_("Hello, world!"))

However, the Click module doesn’t use our TRANSLATIONS object. To fix this, we

need to use the GNU gettext API. This is kind of dirty, because it messes with

the global state, so let’s do it in cli.py (the file which contains all your

Click groups and commands).

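# Here, gettext is the standard library module, not the function from i18n.py.
import gettext

from .i18n import _LOCALE_DIR

if gettext.find("your-module", localedir=_LOCALE_DIR):
    gettext.bindtextdomain("your-module", _LOCALE_DIR)
    gettext.textdomain("your-module")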

Internationalise Click

When using Click, you have two challenges:

- You need to translate the help docstrings of your groups and commands.

- You need to translate the Click gettext strings.

Translating docstrings

Normally, you have some code like this:

@click.group(name="your-module")
def main():
    """Help text goes here."""
    ...

And when you run your-module --help, you get the following output:

$ your-module --help

Usage: your-module [OPTIONS] COMMAND [ARGS]...

Help text goes here.

Options:

--help Show this message and exit.

You cannot wrap the docstring in a _() call. So by necessity, we will need to

remove the docstring and do something like this:

_MAIN_HELP = _("Help text goes here.")

@click.group(name="your-module", help=_MAIN_HELP)
def main():
    ...

For multiple paragraphs, I translate each paragraph separately, which is easier for the translators:

_HELP_TEXT = (

_("Help text goes here.")

+ "\n\n"

+ _(

"Longer help paragraph goes here. We use implicit string concatenation"

" to avoid putting newlines in the translated text."

)

)

Translate the Click gettext strings

We will create a script generate_pot.sh that generates our .pot file,

including the Click translations. My script-fu isn’t very good, but it appears

to work.

#!/usr/bin/env sh

# Set VIRTUAL_ENV if one does not exist.

if [ -z "${VIRTUAL_ENV}" ]; then

VIRTUAL_ENV=$(poetry env info --path)

fi

# Get all the translation strings from the source.

xgettext --add-comments --from-code=utf-8 --output=po/your-module.pot src/**/*.py

xgettext --add-comments --output=po/click.pot "${VIRTUAL_ENV}"/lib/python*/*-packages/click/**.py

# Put everything in your-module.pot.

msgcat --output=po/your-module.pot po/your-module.pot po/click.pot

# Update the .po files. Ideally this should be done by Weblate, but it appears

# that it isn't.

for name in po/*.po

do

msgmerge --output="${name}" "${name}" po/your-module.pot;

done

After running this script, all strings that must be translated are in your

.pot and existing .po files.

You can use the above script for argparse as well, with minor modifications.

Generate .pot file automagically

You don’t want to manually run the generate_pot.sh script. Instead, you want

the CI (Forgejo Actions) to run it on your behalf whenever a gettext string is

changed or introduced.

Use the following .forgejo/workflows/gettext.yaml file.

name: Update .pot file

on:
  push:
    branches:
      - main
    # Only run this job when a Python source file is edited. Not strictly
    # needed.
    paths:
      - "src/your-module/**.py"

jobs:
  create-pot:
    runs-on: docker
    container: nikolaik/python-nodejs:python3.11-nodejs21
    steps:
      - uses: actions/checkout@v3
      - name: Install gettext
        run: |
          apt-get update
          apt-get install -y gettext
      # We mostly install your-module to install the click dependency.
      - name: Install your-module
        run: poetry install --no-interaction --only main
      - name: Install wlc
        run: pip install wlc
      - name: Lock Weblate
        run: |
          wlc --url https://hosted.weblate.org/api/ --key ${{ secrets.WEBLATE_KEY }} lock your-project/your-module
      - name: Push changes from Weblate to upstream repository
        run: |
          wlc --url https://hosted.weblate.org/api/ --key ${{ secrets.WEBLATE_KEY }} push your-project/your-module
      - name: Pull Weblate translations
        run: git pull origin main
      - name: Create .pot file
        run: ./generate_pot.sh
      # Normally, POT-Creation-Date changes in two locations. Check if the diff
      # includes more than just those two lines.
      - name: Check if sufficient lines were changed
        id: diff
        run:
          echo "changed=$(git diff -U0 | grep '^[+|-][^+|-]' | grep -Ev
          '^[+-]"POT-Creation-Date' | wc -l)" >> $GITHUB_OUTPUT
      - name: Commit and push updated your-module.pot
        if: ${{ steps.diff.outputs.changed != '0' }}
        run: |
          git config --global user.name "your-module-bot"
          git config --global user.email "<>"
          git add po/your-module.pot po/*.po
          git commit -m "Update your-module.pot"
          git push origin main
          wlc --url https://hosted.weblate.org/api/ --key ${{ secrets.WEBLATE_KEY }} pull your-project/your-module
          wlc --url https://hosted.weblate.org/api/ --key ${{ secrets.WEBLATE_KEY }} unlock your-project/your-module

The job is fairly self-explanatory. The wlc command talks with Weblate, which

we will set up soon. The job installs dependencies, gets the latest

translations from Weblate, generates the .pot, and then pushes the generated

.pot (and .po files) if there were changed strings.
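The “sufficient lines were changed” check is the least obvious step, so here is its intent sketched in Python. It mirrors the idea of the grep pipeline (count added or removed lines, ignoring file headers and POT-Creation-Date lines) but is not a character-for-character port of the regular expressions; the function name is made up for illustration.

```python
def count_changed_lines(diff_text):
    """Count meaningful added/removed lines in `git diff -U0` output."""
    count = 0
    for line in diff_text.splitlines():
        # Skip the file headers of the diff.
        if line.startswith(("+++", "---")):
            continue
        # Only lines starting with + or - are actual changes.
        if not line.startswith(("+", "-")):
            continue
        # The creation date changes on every run and does not count.
        if line[1:].startswith('"POT-Creation-Date'):
            continue
        count += 1
    return count


sample = (
    "--- a/po/your-module.pot\n"
    "+++ b/po/your-module.pot\n"
    "@@ -10 +10 @@\n"
    '-"POT-Creation-Date: 2024-04-01 10:00+0000\\n"\n'
    '+"POT-Creation-Date: 2024-04-14 10:00+0000\\n"\n'
)
print(count_changed_lines(sample))                            # 0
print(count_changed_lines(sample + '+msgid "New string"\n'))  # 1
```

A result of 0 means that only the timestamp changed, so there is nothing worth committing.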

See

reuse-tool

for a GitHub Actions job. It is currently missing the wlc locking.

Set up Weblate

Create your project in Weblate. In the VCS

settings, set version control system to ‘Git’. Set your source repository and

branch correctly. Set the push URL to

https://<your-token>@codeberg.org/your-name/your-module.git. You get the token

from https://codeberg.org/user/settings/applications. You will need to give

the token access to ‘repository’. There should be a more granular way of doing

this, but I am not aware of it.

Set the repository browser to

https://codeberg.org/your-name/your-module/src/branch/{{branch}}/{{filename}}#{{line}}.

Turn ‘Push on commit’ on, and set merge style to ‘rebase’. Also, always lock on

error.

In your project settings on Weblate, generate a project API token. Then in your

Forgejo Actions settings, create a secret named WEBLATE_KEY with the project

API token as value.

Publishing your translations with Poetry

Now that all the translation plumbing is working, you just need to make sure

that you generate your .mo files when building/publishing with Poetry.

We add a build step to Poetry using the undocumented build

script. Add the following to your pyproject.toml:

[tool.poetry.build]

generate-setup-file = false

script = "_build.py"

Do NOT name your file build.py. It will break Arch Linux

packaging.

Create the file _build.py. Here are the contents:

import glob
import logging
import os
import shutil
import subprocess
from pathlib import Path

_LOGGER = logging.getLogger(__name__)
ROOT_DIR = Path(os.path.dirname(__file__))
BUILD_DIR = ROOT_DIR / "build"
PO_DIR = ROOT_DIR / "po"


def mkdir_p(path):
    """Make directory and its parents."""
    Path(path).mkdir(parents=True, exist_ok=True)


def rm_fr(path):
    """Force-remove directory."""
    path = Path(path)
    if path.exists():
        shutil.rmtree(path)


def main():
    """Compile .mo files and move them into src directory."""
    rm_fr(BUILD_DIR)
    mkdir_p(BUILD_DIR)

    msgfmt = None
    for executable in ["msgfmt", "msgfmt.py", "msgfmt3.py"]:
        msgfmt = shutil.which(executable)
        if msgfmt:
            break

    if msgfmt:
        po_files = glob.glob(f"{PO_DIR}/*.po")
        mo_files = []

        # Compile
        for po_file in po_files:
            _LOGGER.info(f"compiling {po_file}")
            lang_dir = (
                BUILD_DIR
                / "your-module/locale"
                / Path(po_file).stem
                / "LC_MESSAGES"
            )
            mkdir_p(lang_dir)
            destination = Path(lang_dir) / "your-module.mo"
            subprocess.run(
                [
                    msgfmt,
                    "-o",
                    str(destination),
                    str(po_file),
                ],
                check=True,
            )
            mo_files.append(destination)

        # Move compiled files into src
        rm_fr(ROOT_DIR / "src/your-module/locale")
        for mo_file in mo_files:
            relative = (
                ROOT_DIR / Path("src") / os.path.relpath(mo_file, BUILD_DIR)
            )
            _LOGGER.info(f"copying {mo_file} to {relative}")
            mkdir_p(relative.parent)
            shutil.copyfile(mo_file, relative)


if __name__ == "__main__":
    main()

It is probably a little over-engineered (building into build/ and then subsequently copying to src/your-module/locale is unnecessary), but it works.
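If the intermediate build/ directory is not wanted, the compile step could write straight into the package. This is only a minimal sketch, assuming the same po/<lang>.po layout and the placeholder name your-module used above; mo_destination and compile_catalogs are hypothetical helper names, not part of Poetry.

```python
import shutil
import subprocess
from pathlib import Path


def mo_destination(po_file, locale_dir, domain="your-module"):
    """Map po/<lang>.po to <locale_dir>/<lang>/LC_MESSAGES/<domain>.mo."""
    return Path(locale_dir) / Path(po_file).stem / "LC_MESSAGES" / f"{domain}.mo"


def compile_catalogs(po_dir, locale_dir, domain="your-module"):
    """Compile every .po file in po_dir directly into locale_dir."""
    msgfmt = shutil.which("msgfmt")
    if msgfmt is None:
        # Like the script above, do nothing when msgfmt is not installed.
        return []
    compiled = []
    for po_file in sorted(Path(po_dir).glob("*.po")):
        destination = mo_destination(po_file, locale_dir, domain)
        destination.parent.mkdir(parents=True, exist_ok=True)
        subprocess.run([msgfmt, "-o", str(destination), str(po_file)], check=True)
        compiled.append(destination)
    return compiled
```

Calling compile_catalogs("po", "src/your-module/locale") from main() would then replace both the compile loop and the copy loop.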

Finally, make sure to actually include *.mo files in pyproject.toml:

include = [
    { path = "src/your-module/locale/**/*.mo", format = "wheel" }
]
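One way to check that the catalogues really ended up in the installed package is to load them with the standard gettext module. The following is a minimal sketch, assuming the locale/ directory sits directly inside the package directory; load_translations is a hypothetical helper name.

```python
import gettext
from pathlib import Path


def load_translations(package_dir, domain="your-module", languages=None):
    """Return a translations object for the catalogues bundled in package_dir."""
    locale_dir = Path(package_dir) / "locale"
    # fallback=True returns NullTranslations when no catalogue is found,
    # so untranslated strings simply pass through unchanged.
    return gettext.translation(
        domain, localedir=locale_dir, languages=languages, fallback=True
    )
```

Inside the package this could be called as load_translations(Path(__file__).parent), after which the returned object's gettext() method looks up messages.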

And that’s it! A rather dense and curt blog post, but it should contain helpful bits and pieces.

Sunday, 07 April 2024

Some More Slow Progress

A couple of months have elapsed since my last, brief progress report on L4Re development, so I suppose a few words are required to summarise what I have done since. Distractions, travel, and other commitments notwithstanding, I did manage to push my software framework along a little, encountering frustrations and the occasional sensation of satisfaction along the way.

Supporting Real Hardware

Previously, I had managed to create a simple shell-like environment running within L4Re that could inspect an ext2-compatible filesystem, launch programs, and have those programs communicate with the shell – or each other – using pipes. Since I had also been updating my hardware support framework for L4Re on MIPS-based devices, I thought that it was time to face up to implementing support for memory cards – specifically, SD and microSD cards – so that I could access filesystems residing on such cards.

Although I had designed my software framework with things like disks and memory devices in mind, I had been apprehensive about actually tackling driver development for such devices, as well as about whether my conceptual model would prove too simple, necessitating more framework development just to achieve the apparently simple task of reading files. It turned out that the act of reading data, even when almost magical mechanisms like direct memory access (DMA) are used, is as straightforward as one could reasonably expect. I haven’t tested writing data yet, mostly because I am not that brave, but it should be essentially as straightforward as reading.

What was annoying and rather overcomplicated, however, was the way that memory cards have to be coaxed into cooperation, with the SD-related standards featuring layer upon layer of commands added every time they enhanced the technologies. Plenty of time was spent (or wasted) trying to get these commands to behave and to allow me to gradually approach the step where data would actually be transferred. In contrast, setting up DMA transactions was comparatively easy, particularly using my interactive hardware experimentation environment.

There were some memorable traps encountered in the exercise. One involved making sure that the interrupts signalling completed DMA transactions were delivered to the right thread. In L4Re, hardware interrupts are delivered via IRQ (interrupt request) objects to specific threads, and it is obviously important to make sure that a thread waiting for notifications (including interrupts) expects these notifications. Otherwise, they may cause a degree of confusion, which is what happened when a thread serving “blocks” of data to the filesystem components was presented with DMA interrupt occurrences. Obviously, the solution was to be more careful and to “bind” the interrupts to the thread interacting with the hardware.

Another trap involved the follow-on task of running programs that had been read from the memory card. In principle, this should have yielded few surprises: my testing environment involves QEMU and raw filesystem data being accessed in memory, and program execution was already working fine there. However, various odd exceptions were occurring when programs were starting up, forcing me to exercise the useful kernel debugging tool provided with the Fiasco.OC (or L4Re) microkernel.

Of course, the completely foreseeable problem involved caching: data loaded from the memory card was not yet available in the processor’s instruction cache, and so the processor was running code (or potentially something that might not have been code) that had been present in the cache. The problem tended to arise after a jump or branch in the code, executing instructions that did undesirable things to the values of the registers until something severe enough caused an exception. The solution, of course, was to make sure that the instruction cache was synchronised with the data cache containing the newly read data using the l4_cache_coherent function.

Replacing the C Library

With that, I could replicate my shell environment on “real hardware” which was fairly gratifying. But this only led to the next challenge: that of integrating my filesystem framework into programs in a more natural way. Until now, accessing files involved a special “filesystem client” library that largely mimics the normal C library functions for such activities, but the intention has always been to wrap these with the actual C library functions so that portable programs can be run. Ideally, there would be a way of getting the L4Re C library – an adapted version of uClibc – to use these client library functions.

A remarkable five years have passed since I last considered such matters. Back then, my investigations indicated that getting the L4Re library to interface to the filesystem framework might be an involved and cumbersome exercise due to the way the “backend” functionality is implemented. It seemed that the L4Re mechanism for using different kinds of filesystems involved programs dynamically linking to libraries that would perform the access operations on the filesystem, but I could not find much documentation for this framework, and I had the feeling that the framework was somewhat underdeveloped, anyway.

My previous investigations had led me to consider deploying an alternative C library within L4Re, with programs linking to this library instead of uClibc. C libraries generally come across as rather messy and incoherent things, accumulating lots of historical baggage as files are incorporated from different sources to support long-forgotten systems and architectures. The challenge was to find a library that could be conveniently adapted to accommodate a non-Unix-like system, with the emphasis on convenience precluding having to make changes to hundreds of files. Eventually, I chose Newlib because the breadth of its dependencies on the underlying system is rather narrow: a relatively small number of fundamental calls. In contrast, other C libraries assume a Unix-like system with countless, specialised system calls that would need to be reinterpreted and reframed in terms of my own library’s operations.

My previous effort had rather superficially demonstrated a proof of concept: linking programs to Newlib and performing fairly undemanding operations. This time round, I knew that my own framework had become more complicated, employed C++ in various places, and would create a lot of work if I were to decouple it from various L4Re packages, as I had done in my earlier proof of concept. I briefly considered and then rejected undertaking such extra work, instead deciding that I would simply dust off my modified Newlib sources, build my old test programs, and see which symbols were missing. I would then seek to reintroduce these symbols and hope that the existing L4Re code would be happy with my substitutions.

Supporting Threads

For the very simplest of programs, I was able to “stub” a few functions and get them to run. However, part of the sophistication of my framework in its current state is its use of threading to support various activities. For example, monitoring data streams from pipes and files involves a notification mechanism employing threads, and thus a dependency on the pthread library is introduced. Unfortunately, although Newlib does provide a similar pthread library to that featured in L4Re, it is not really done in a coherent fashion, and there is other pthread support present in Newlib that just adds to the confusion.

Initially, then, I decided to create “stub” implementations for the different functions used by various libraries in L4Re, like the standard C++ library whose concurrency facilities I use in my own code. I made a simple implementation of pthread_create, along with some support for mutexes. Running programs did exercise these functions and produce broadly expected results. Continuing along this path seemed like it might entail a lot of work, however, and in studying the existing pthread library in L4Re, I had noticed that although it resides within the “uclibc” package, it is somewhat decoupled from the C library itself.

Favouring laziness, I decided to see if I couldn’t make a somewhat independent package that might then be interfaced to Newlib. For the most part, this exercise involved introducing missing functions and lots of debugging, watching the initialisation of programs fail due to things like conflicts with capability allocation, perhaps due to something I am doing wrong, or perhaps exposing conditions that are fortuitously avoided in L4Re’s existing uClibc arrangement. Ultimately, I managed to get a program relying on threading to start, leaving me with the exercise of making sure that it was producing the expected output. This involved some double-checking of previous measures to allow programs using different C libraries to communicate certain kinds of structures without them misinterpreting the contents of those structures.

Further Work

There is plenty still to do in this effort. First of all, I need to rewrite the remaining test programs to use C library functions instead of client library functions, having done this for only a couple of them. Then, it would be nice to expand C library coverage to deal with other operations, particularly process creation since I spent quite some time getting that to work.

I need to review the way Newlib handles concurrency and determine what else I need to do to make everything work as it should in that regard. I am still using code from an older version of Newlib, so an update to a newer version might be sensible. In this latest round of C library evaluation, I briefly considered Picolibc which is derived from Newlib and other sources, but I didn’t fancy having to deal with its build system or to repackage the sources to work with the L4Re build system. I did much of the same with Newlib previously and, having worked through such annoyances, was largely able to focus on the actual code as opposed to the tooling.

Currently, I have been statically linking programs to Newlib, but I eventually want to dynamically link them. This does exercise different paths in the C and pthread libraries, but I also want to explore dynamic linking more broadly in my own environment, having already postponed such investigations from my work on getting programs to run. Introducing dynamic linking and shared libraries helps to reduce memory usage and increase the performance of a system when multiple programs need the same libraries.

There are also some reasonable arguments for making the existing L4Re pthread implementation more adaptable, consolidating my own changes to the code, and also for considering making or adopting other pthread implementations. Convenient support for multiple C library implementations, and for combining these with other libraries, would be desirable, too.

Much of the above has been a distraction from what I have been wanting to focus on, however. Had it been more apparent how to usefully extend uClibc, I might not have bothered looking at Newlib or other C libraries, and then I probably wouldn’t have looked into threading support. Although I have accumulated some knowledge in the process, and although some of that knowledge will eventually have proven useful, I cannot help feeling that L4Re, being a fairly mature product at this point and a considerable achievement, could be more readily extensible and accessible than it currently is.

Friday, 05 April 2024

Migrate legacy openldap to a docker container.


Prologue

I maintain a couple of legacy, end-of-life CentOS 6.x SOHO servers at different locations. Stability on those systems is unparalleled and is the main reason for keeping them in production: they have run for almost a decade without a major issue.

But these legacy systems need modernizing, so I must prepare a migration plan. The initial goal was to migrate everything to ansible roles. Although I have walked down this path a few times in the past, the result is not something desirable: a plethora of configuration files and custom scripts, not easily maintainable for future me.

The current goal is to set up a minimal underlying operating system that I can easily upgrade through its LTS versions, and to separate the services from it: keep the configuration in a git repository and deploy docker containers via docker-compose.

In this blog post, I will document the openldap service. I had some issues with the bitnami/openldap docker container, so the post also serves as a kind of documentation.

Preparation

There are two different cases: on one node I have the initial ldif files (without the data), and on the second node I only have the data in ldifs but not the initial schema. So, for both locations I need to create a combined ldif that contains both the schema and the data.

And that took me more time than it should have! I could not get the service running correctly, and I experimented with ldap exports until I found something that worked with the bitnami/openldap notes and environment variables.
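Combining a schema ldif and a data ldif is essentially concatenation with a blank line between entries. This is a minimal sketch under that assumption; combine_ldifs and the file names in the usage note are hypothetical, and the sources are assumed to be listed so that parent entries come before their children.

```python
from pathlib import Path


def combine_ldifs(sources, destination):
    """Concatenate LDIF files, keeping one blank line between them."""
    chunks = []
    for source in sources:
        text = Path(source).read_text(encoding="utf-8").strip("\n")
        if text:
            chunks.append(text)
    # LDIF entries are separated by an empty line.
    Path(destination).write_text("\n\n".join(chunks) + "\n", encoding="utf-8")
    return Path(destination)
```

For example, combine_ldifs(["schema.ldif", "data.ldif"], "combined.ldif") would write both parts into a single file.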

ldapsearch command

In /root/.ldap_conf I keep the Base, Bind and Admin Password environment variables (only the root user can read them).

cat /usr/local/bin/lds

#!/bin/bash

source /root/.ldap_conf

/usr/bin/ldapsearch \
    -o ldif-wrap=no \
    -H ldap://$HOST \
    -D $BIND \
    -b $BASE \
    -LLL -x \
    -w $PASS $*

sudo lds > /root/openldap_export.ldif

Bitnami/openldap

The GitHub page of bitnami/openldap has extensive documentation and lists a lot of environment variables that you need to set up to run an openldap service. Unfortunately, it took me quite a while to find the proper configuration for importing the ldif from my current openldap service.

Over the years, bitnami has made a few changes to libopenldap.sh, which led to a frustrating period of reviewing the shell script to understand what I needed to do.

I would like to explain it in the simplest terms here, and hopefully someone will find it easier to migrate their openldap.

TL;DR

The correct way:

Create local directories

mkdir -pv {ldifs,openldap}

Place your openldap_export.ldif in the local ldifs directory, and start the openldap service with:

docker compose up

---

services:
  openldap:
    image: bitnami/openldap:2.6
    container_name: openldap
    env_file:
      - path: ./ldap.env
    volumes:
      - ./openldap:/bitnami/openldap
      - ./ldifs:/ldifs
    ports:
      - 1389:1389
    restart: always

volumes:
  data:
    driver: local
    driver_opts:
      device: /storage/docker

Your environment configuration file should look like:

cat ldap.env

LDAP_ADMIN_USERNAME="admin"
LDAP_ADMIN_PASSWORD="testtest"
LDAP_ROOT="dc=example,dc=org"
LDAP_ADMIN_DN="cn=admin,$LDAP_ROOT"
LDAP_SKIP_DEFAULT_TREE=yes

Below, we analyze the bitnami/openldap docker container and the process in more detail.

OpenLDAP Version in docker container images.

At the time of writing, the bitnami/openldap docker images ship the following OpenLDAP versions:

bitnami/openldap:2   -> OpenLDAP: slapd 2.4.58
bitnami/openldap:2.5 -> OpenLDAP: slapd 2.5.17
bitnami/openldap:2.6 -> OpenLDAP: slapd 2.6.7

list images

docker images -a

REPOSITORY          TAG   IMAGE ID       CREATED        SIZE
bitnami/openldap    2.6   bf93eace348a   30 hours ago   160MB
bitnami/openldap    2.5   9128471b9c2c   2 days ago     160MB
bitnami/openldap    2     3c1b9242f419   2 years ago    151MB

Initial run without skipping default tree

As mentioned above, the problem was with the LDAP environment variables, and LDAP_SKIP_DEFAULT_TREE was at the centre of it.

cat ldap.env

LDAP_ADMIN_USERNAME="admin"
LDAP_ADMIN_PASSWORD="testtest"
LDAP_ROOT="dc=example,dc=org"
LDAP_ADMIN_DN="cn=admin,$LDAP_ROOT"
LDAP_SKIP_DEFAULT_TREE=no

For testing, always empty the ./openldap/ directory first.

docker compose up -d

Running ldapsearch (see above) returns results similar to the following:

lds

dn: dc=example,dc=org

objectClass: dcObject

objectClass: organization

dc: example

o: example

dn: ou=users,dc=example,dc=org

objectClass: organizationalUnit

ou: users

dn: cn=user01,ou=users,dc=example,dc=org

cn: User1

cn: user01

sn: Bar1

objectClass: inetOrgPerson

objectClass: posixAccount

objectClass: shadowAccount

userPassword:: Yml0bmFtaTE=

uid: user01

uidNumber: 1000

gidNumber: 1000

homeDirectory: /home/user01

dn: cn=user02,ou=users,dc=example,dc=org

cn: User2

cn: user02

sn: Bar2

objectClass: inetOrgPerson

objectClass: posixAccount

objectClass: shadowAccount

userPassword:: Yml0bmFtaTI=

uid: user02

uidNumber: 1001

gidNumber: 1001

homeDirectory: /home/user02

dn: cn=readers,ou=users,dc=example,dc=org

cn: readers

objectClass: groupOfNames

member: cn=user01,ou=users,dc=example,dc=org

member: cn=user02,ou=users,dc=example,dc=org

So, as you can see, the container creates some default users and groups.
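The double colon in userPassword:: marks a base64-encoded value in LDIF, so the default passwords in the output above can be read with a few lines of Python. This is a small sketch; decode_ldif_value is a hypothetical helper name.

```python
import base64


def decode_ldif_value(line):
    """Split an LDIF line into (attribute, value), decoding '::' values."""
    attribute, _, rest = line.partition(":")
    if rest.startswith(":"):
        # 'attr:: <base64>' marks a base64-encoded value.
        return attribute, base64.b64decode(rest[1:].strip()).decode("utf-8")
    return attribute, rest.strip()


print(decode_ldif_value("userPassword:: Yml0bmFtaTE="))
```

For the first default user this prints ('userPassword', 'bitnami1').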

Initial run with skipping default tree

Now, let’s skip creating the default users/groups.

cat ldap.env

LDAP_ADMIN_USERNAME="admin"
LDAP_ADMIN_PASSWORD="testtest"
LDAP_ROOT="dc=example,dc=org"
LDAP_ADMIN_DN="cn=admin,$LDAP_ROOT"
LDAP_SKIP_DEFAULT_TREE=yes

(Again, always empty the ./openldap/ directory first.)

docker compose up -d

ldapsearch now returns:

No such object (32)

That puzzled me … a lot!

Conclusion

It does NOT help to place your ldif schema file and data somewhere and populate the LDAP variables documented for bitnami/openldap, or to use ANY other LDAP variable from the bitnami/openldap reference manual.

The correct method is to SKIP the default tree and place your exported ldif in the local ldifs directory. Nothing else worked.
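With the default tree skipped, nothing else creates the base entry, which is presumably why the search returned No such object (32). So it seems worth checking that the export really contains an entry for LDAP_ROOT before importing it. This is a rough sketch, assuming an unwrapped export (ldif-wrap=no, as in the lds script) with plain-text DNs; ldif_dns and contains_root are hypothetical helper names.

```python
def ldif_dns(ldif_text):
    """Return the DNs of all entries in an unwrapped LDIF export."""
    return [
        line[len("dn:"):].strip()
        for line in ldif_text.splitlines()
        # Skip base64-encoded DNs ('dn::'), which this sketch does not decode.
        if line.lower().startswith("dn:") and not line.lower().startswith("dn::")
    ]


def contains_root(ldif_text, ldap_root):
    """True if the export has an entry for the base DN itself."""
    # Crude normalisation: ignore case and spaces when comparing DNs.
    wanted = ldap_root.replace(" ", "").lower()
    return any(dn.replace(" ", "").lower() == wanted for dn in ldif_dns(ldif_text))
```

Reading ldifs/openldap_export.ldif and calling contains_root(text, "dc=example,dc=org") gives a quick yes or no before starting the container.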

It took me almost 4 days to figure this out, and I had to read libopenldap.sh to get there.

That’s it !

Wednesday, 06 March 2024

SymPy: a powerful math library

SymPy is a lightweight symbolic mathematics library for Python under the 3-clause BSD license that can be used either as a library or in an interactive environment. It features symbolic expressions, solving equations, plotting and much more!

Creating and using functions

Creating a function is as simple as using variables and putting them into a mathematical expression:


Line 1 imports the x and y Symbols from the abc collection of Symbols, line 3 creates the f function which is $2x^2 + 3y + 10$, and line 4 evaluates f with values 3 and 5 for x and y, respectively.
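A minimal sketch consistent with this description might look as follows. Evaluating with subs() is an assumption here, and the name value is only for illustration.

```python
from sympy.abc import x, y

f = 2*x**2 + 3*y + 10
value = f.subs({x: 3, y: 5})
print(value)
```

This prints 43, since $2 \cdot 3^2 + 3 \cdot 5 + 10 = 43$.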

SymPy exports a large list of mathematical functions for more complex expressions, such as logarithm, exponential, etc., and is able to analyze a function's characteristics over arbitrary intervals:
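As a hedged illustration of this kind of analysis, the sketch below uses a simple polynomial rather than a logarithm or exponential, together with SymPy's is_increasing, minimum and maximum helpers. The function and the intervals are chosen only for illustration.

```python
from sympy import Interval
from sympy.abc import x
from sympy.calculus.singularities import is_increasing
from sympy.calculus.util import maximum, minimum

f = x**2 - 4*x

# Is f increasing on [2, 5]?
rising = is_increasing(f, Interval(2, 5), x)

# Extreme values of f on [0, 5]
lowest = minimum(f, x, Interval(0, 5))
highest = maximum(f, x, Interval(0, 5))

print(rising, lowest, highest)
```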


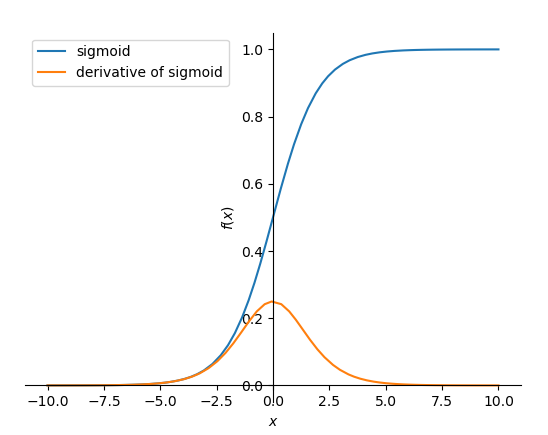

Plotting

SymPy is able to easily plot functions on a given interval:


Line 1 imports the plot() function, line 3 creates the f Function object from the sigmoid expression $\frac{1}{1+e^{-x}}$, line 4 plots f and its derivative between -5 and 5, lines 5-6 set the legend labels and line 7 shows the created plot:

Sigmoid and its derivative.

In a few lines of code, the library allows one to create a mathematical function, do operations on it like computing its derivative and plot the result. Moreover, as SymPy uses Matplotlib under the hood by default, the plots are modifiable using the Matplotlib API.
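The derivative step can be sketched without any plotting. This assumes the sigmoid is written as a plain expression; the names df and slope_at_zero are illustrative only.

```python
from sympy import diff, exp
from sympy.abc import x

f = 1 / (1 + exp(-x))       # the sigmoid
df = diff(f, x)             # its symbolic derivative
slope_at_zero = df.subs(x, 0)

print(df)
print(slope_at_zero)
```

The slope at the origin comes out as 1/4, the steepest point of the sigmoid.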

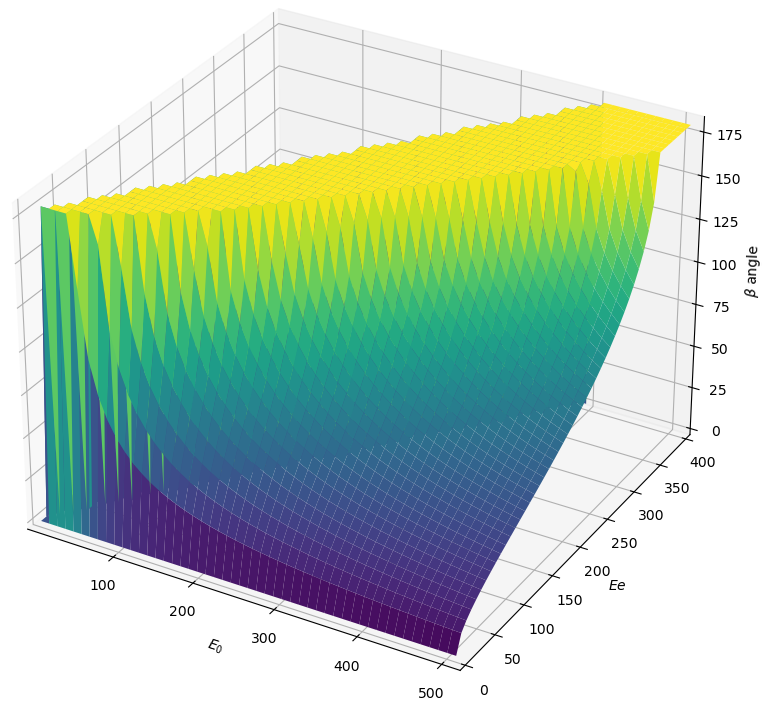

SymPy also supports 3D plots with the plot3d() function, which helps in understanding the relationship between two variables and an outcome. The following code computes the scattering angle (in degrees) after Compton scattering, depending on the initial photon energy and the energy of the recoiling electron, using the Compton scattering formula:

$$\beta = \arccos\left(1 - \frac{E_e \cdot 511}{E_0 \cdot (E_0 - E_e)}\right) \cdot \frac{180}{3.14159}$$


Line 1 imports the plot3d function, line 3 creates the E0 (the initial photon energy) and Ee (the energy of the recoiling electron) custom variables[1], line 4 defines the beta function which depends on the E0 and Ee variables, line 6 does the plotting and defines the axes' intervals, line 7 sets the axes' labels and line 8 displays the following figure:

Compton angle depending on E0 and Ee.
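The formula itself can be checked at a single point without plotting. This is a minimal sketch, assuming energies in keV (511 being the electron rest energy) and using SymPy's exact pi instead of the constant 3.14159; the sample values are chosen only for illustration.

```python
from sympy import Rational, acos, pi, symbols

E0, Ee = symbols("E0 Ee", positive=True)
beta = acos(1 - (Ee * 511) / (E0 * (E0 - Ee))) * 180 / pi

# A 511 keV photon that hands half of its energy to the electron
angle = beta.subs({E0: 511, Ee: Rational(511, 2)})
print(angle)
```

For these values the angle evaluates to exactly 90 degrees.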

Solving equations

SymPy also has an equation solver for algebraic equations and systems of equations. For ease of use, SymPy assumes that the expressions it is given are equal to zero.
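As a small sketch of this convention, using expressions rather than strings (the equations are chosen only for illustration):

```python
from sympy import solve
from sympy.abc import x, y

# x**2 - 4 is read as the equation x**2 - 4 = 0
roots = solve(x**2 - 4, x)

# A linear system: x + y = 3 and x - y = 1
system = solve([x + y - 3, x - y - 1], [x, y])

print(roots, system)
```

The first call yields the two roots -2 and 2, and the second yields x = 2, y = 1.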


The solver also works for boolean logic, where SymPy reports which truth values the variables must take to satisfy a boolean expression:


An unsatisfiable expression yields False.
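A minimal sketch of both cases, using the satisfiable() function from sympy.logic.inference (the expressions are chosen only for illustration):

```python
from sympy.abc import x, y
from sympy.logic.inference import satisfiable

model = satisfiable(x & ~y)        # a satisfying assignment
impossible = satisfiable(x & ~x)   # a contradiction

print(model, impossible)
```

Here model maps x to True and y to False, while impossible is False.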

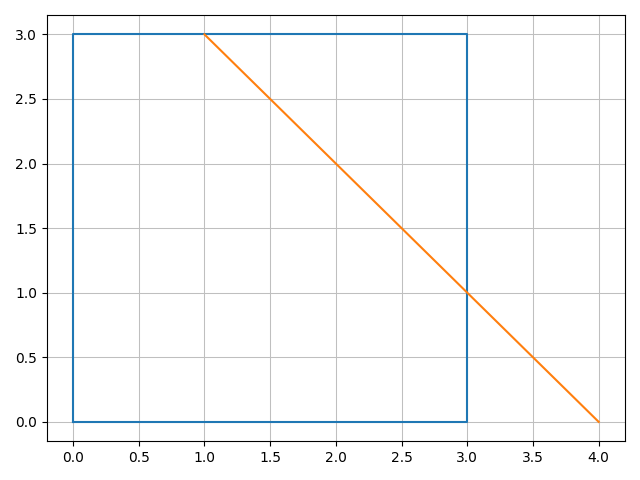

Geometry

SymPy also features a geometry module for working with geometric objects such as Points, Segments and Polygons.


Line 1 imports classes from the geometry module, line 2 imports the SymPy plotting backends library, which contains additional plotting features needed to plot geometric objects, lines 3 and 4 create a polygon and a segment, respectively, line 6 plots the figure and line 7 returns the intersection points of the polygon and the segment. Line 6 produces the following figure:

Compton angle depending on E0 and Ee.
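The intersection step can be sketched without the plotting backend. The square and the segment below are assumptions chosen for illustration, not the shapes from the figure.

```python
from sympy.geometry import Point, Polygon, Segment

square = Polygon(Point(0, 0), Point(4, 0), Point(4, 4), Point(0, 4))
segment = Segment(Point(-1, 2), Point(5, 2))

# Points where the segment crosses the sides of the square
crossings = square.intersection(segment)
print(crossings)
```

The horizontal segment crosses the left and right sides of the square, at (0, 2) and (4, 2).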

The SymPy library is thus easy to use and suitable for various applications. Because it’s Free Software, anyone can use, share, study and improve it for whatever purpose, just like mathematics.

[1] We could use the x and y variables here, but having custom variable names makes reading the code easier.

Monday, 04 March 2024

A Tall Tale of Denied Glory

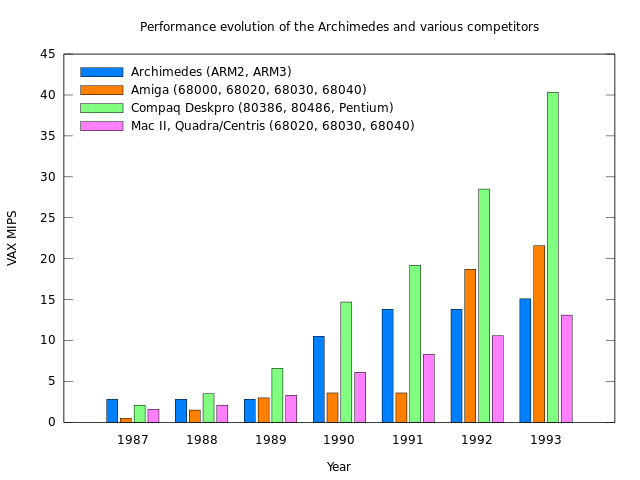

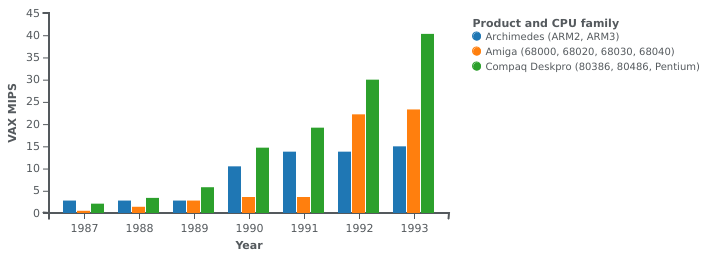

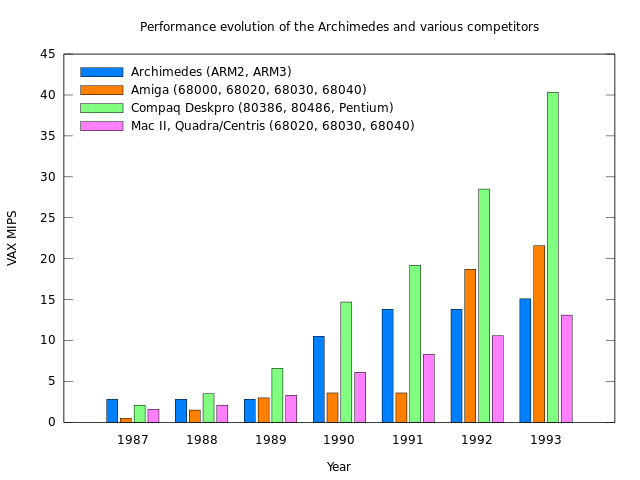

I seem to be spending too much time looking into obscure tales from computing history, but continuing an earlier tangent from a recent article, noting the performance of different computer systems at the end of the 1980s and the start of the 1990s, I found myself evaluating one of those Internet rumours that probably started to do the rounds about thirty years ago. We will get to that rumour – a tall tale, indeed – in a moment. But first, a chart that I posted in an earlier article:

Performance evolution of the Archimedes and various competitors

As this nice chart indicates, comparing processor performance in computers from Acorn, Apple, Commodore and Compaq, different processor families bestowed a competitive advantage on particular systems at various points in time. For a while, Acorn’s ARM2 processor gave Acorn’s Archimedes range the edge over much more expensive systems using the Intel 80386, showcased in Compaq’s top-of-the-line models, as well as offerings from Apple and Commodore, these relying on Motorola’s 68000 family. One can, in fact, claim that a comparison between ARM-based systems and 80386-based systems would have been unfair to Acorn: more similarly priced systems from PC-compatible vendors would have used the much slower 80286, making the impact of the ARM2 even more remarkable.

Something might be said about the evolution of these processor families, what happened after 1993, and the introduction of later products. Such topics are difficult to address adequately for a number of reasons, principally the absence of appropriate benchmark results and the evolution of benchmarking to more accurately reflect system performance. Acorn never published SPEC benchmark figures, nor did ARM (at the time, at least), and any given benchmark as an approximation to “real-world” computing activities inevitably drifts away from being an accurate approximation as computer system architecture evolves.

However, in another chart I made to cover Acorn’s Unix-based RISC iX workstations, we can consider another range of competitors and quite a different situation. (This chart also shows off the nice labelling support in gnuplot that wasn’t possible with the currently disabled MediaWiki graph extension.)

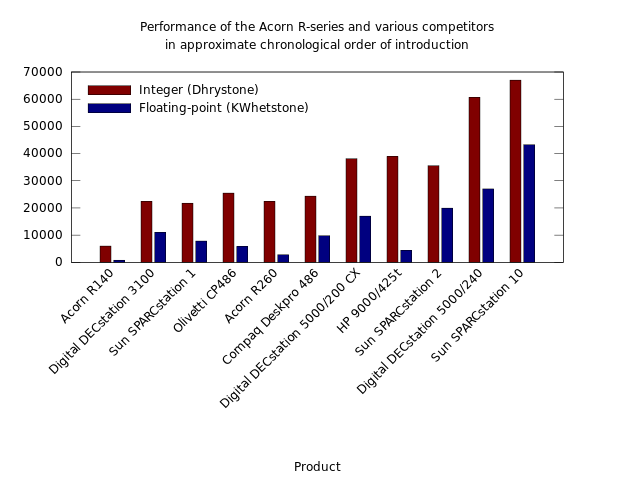

Performance of the Acorn R-series and various competitors in approximate chronological order of introduction: a chart produced by gnuplot and converted from SVG to PNG for Wikipedia usage.

Now, this chart only takes us from 1989 until 1992, which will not satisfy anyone wondering what happened next in the processor wars. But it shows the limits of Acorn’s ability to enter the lucrative Unix workstation market with a processor that was perceived to be rather fast in the world of personal computers. Acorn’s R140 used the same ARM2 processor introduced in the Archimedes range, but even at launch this workstation proved to be considerably slower than somewhat more expensive workstation models from Digital and Sun employing MIPS and SPARC processors respectively.

Fortunately for Acorn, adding a cache to the ARM2 (plus a few other things) to make the ARM3 unlocked a considerable boost in performance. Although the efficient utilisation of available memory bandwidth had apparently been a virtue for the ARM designers, coupling the processor to memory performance had put a severe limit on overall performance. Meanwhile, the designers of the MIPS and SPARC processor families had started out with a different perspective and had considered cache memory almost essential in the kind of computer architectures that would be using these processors.

Acorn didn’t make another Unix workstation after the R260, released in 1990, for reasons that could be explored in depth another time. One of them, however, was that ARM processor design had been spun out to a separate company, ARM Limited, and appeared to be stalling in terms of delivering performance improvements at the same rate as previously, or indeed at the same rate as other processor families. Acorn did introduce the ARM610 belatedly in 1994 in its Risc PC, which would have been more amenable to running Unix, but by then the company was arguably beginning the process of unravelling for another set of reasons to be explored another time.

So, That Tall Tale

It is against this backdrop of competitive considerations that I now bring you the tall tale to which I referred. Having been reminded of the Atari Transputer Workstation by a video about the Transputer – another fascinating topic and thus another rabbit hole to explore – I found myself investigating Atari’s other workstation product: a Unix workstation based on the Motorola 68030 known as the Atari TT030 or TT/X, augmenting the general Atari TT product with the Unix System V operating system.

On the chart above, a 68030-based system would sit at a similar performance level to Acorn’s R140, so ignoring aspirational sentiments about “high-end” performance and concentrating on a price of around $3000 (with a Unix licence probably adding to that figure), there were some hopes that Atari’s product would reach a broad audience:

As a UNIX platform, the affordable TT030 may leapfrog machines from IBM, Apple, NeXT, and Sun, as the best choice for mass installation of UNIX systems in these environments.

As it turned out, Atari released the TT without Unix in 1990 and only eventually shipped a Unix implementation in around 1992, discontinuing the endeavour not long afterwards. But the tall tale is not about Atari: it is about their rivals at Commodore and some bizarre claims that seem to have drifted around the Internet for thirty years.

Like Atari and Acorn, Commodore also had designs on the Unix workstation market. And like Atari, Commodore had a range of microcomputers, the Amiga series, based on the 68000 processor family. So, the natural progression for Commodore was to design a model of the Amiga to run Unix, eventually giving us the Amiga 3000UX, priced from around $5000, running an implementation of Unix System V Release 4 branded as “Amiga Unix”.

Reactions from the workstation market were initially enthusiastic but later somewhat tepid. Commodore’s product, although delivered in a much more timely fashion than Atari’s, will also have found itself sitting at a similar performance level to Acorn’s R140 but positioned chronologically amongst the group including Acorn’s much faster R260 and the 80486-based models. It goes without saying that Atari’s eventual product would have been surrounded by performance giants by the time customers could run Unix on it, demonstrating the need to bring products to market on time.

So what is this tall tale, then? Well, it revolves around this not entirely coherent remark, entered by some random person twenty-one years ago on the emerging resource known as Wikipedia:

The Amiga A3000UX model even got the attention of Sun Microsystems, but unfortunately Commodore did not jump at the A3000UX.

If you search the Web for this, including the Internet Archive, the most you will learn is that Sun Microsystems were supposedly interested in adopting the Amiga 3000UX as a low-cost workstation. But the basis of every report of this supposed interest always seems to involve “talk” about a “deal” and possibly “interest” from unspecified “people” at Sun Microsystems. And, of course, the lack of any eventual deal is often blamed on Commodore’s management and perennial villain of the Amiga scene…

There were talks of Sun Microsystems selling Amiga Unix machines (the prototype Amiga 3500) as a low-end Unix workstations under their brand, making Commodore their OEM manufacturer. This deal was let down by Commodore’s Mehdi Ali, not once but twice and finally Sun gave up their interest.

Of course, back in 2003, anything went on Wikipedia. People thought “I know this!” or “I heard something about this!”, clicked the edit link, and scrawled away, leaving people to tidy up the mess two decades later. So, I assume that this tall tale is just the usual enthusiast community phenomenon of believing that a favourite product could really have been a contender, that legitimacy could have been bestowed on their platform, and that their favourite company could have regained some of its faded glory. Similar things happened as Acorn went into decline, too.

Picking It All Apart

When such tales appeal to both intuition and even-handed consideration, they tend to retain a veneer of credibility: of being plausible and therefore possibly true. I cannot really say whether the tale is actually true, only that there is no credible evidence of it being true. However, it is still worth evaluating the details within such tales on their merits to determine whether the substance really sounds particularly likely at all.

So, why would Sun Microsystems be interested in a Commodore workstation product? Here, it helps to review Sun’s own product range during the 1980s, to note that Sun had based its original workstation on the Motorola 68000 and had eventually worked up the 68000 family to the 68030 in its Sun-3 products. Indeed, the final Sun-3 products were launched in 1989, not too long before the Amiga 3000UX came to market. But the crucial word in the previous sentence is “final”: Sun had adopted the SPARC processor family and had started introducing SPARC-based models two years previously. Like other workstation vendors, Sun had started to abandon Motorola’s processors, seeking better performance elsewhere.

A June 1989 review in Personal Workstation magazine is informative, featuring the 68030-based Sun 3/80 workstation alongside Sun’s SPARCstation 1. For diskless machines, the Sun 3/80 came in at around $6000 whereas the SPARCstation 1 came in at around $9000. For that extra $3000, the buyer was probably getting around four times the performance, and it was quite an incentive for Sun’s customers and developers to migrate to SPARC on that basis alone. But even for customers holding on to their older machines and wanting to augment their collection with some newer models, Sun was offering something not far off the “low-cost” price of an Amiga 3000UX with hardware that was probably more optimised for the role.

Sun will have supported customers using these Sun-3 models for as long as support for SunOS was available, eventually introducing Solaris which dropped support for the 68000 family architecture entirely. Just like other Unix hardware vendors, once a transition to various RISC architectures had been embarked upon, there was little enthusiasm for going back and retooling to support the Motorola architecture again. And, after years of resisting, even Motorola was embracing RISC with its 88000 architecture, tempting companies like NeXT and Apple to consider trading up from the 68000 family: an adventure that deserves its own treatment, too.

So, under what circumstances would Sun have seriously considered adopting Commodore’s product? On the face of it, the potential compatibility sounds enticing, and Commodore will have undoubtedly asserted that they had experience at producing low-cost machines in volume, appealing to Sun’s estimate, expressed in the Personal Workstation review, that the customer base for a low-cost workstation would double for every $1000 drop in price. And surely Sun would have been eager to close the doors on manufacturing a product line that was going to be phased out sooner or later, so why not let Commodore keep making low-cost models to satisfy existing customers?

First of all, we might well doubt any claims to be able to produce workstations significantly cheaper than those already available. The Amiga 3000UX was, as noted, only $1000 or so cheaper than the Sun 3/80. Admittedly, it had a hard drive as standard, making the comparison slightly unfair, but then the Sun 3/80 was already around in 1989, meaning that, to be fair to that product, we would need to see how far its pricing had fallen by the time the Amiga 3000UX became available. Commodore certainly had experience in shipping large volumes of relatively inexpensive computers like the Amiga 500, but they were not shipping workstation-class machines in large quantities, and the eventual price of the Amiga 3000UX indicates that such arguments about volume do not automatically confer low cost onto more expensive products.

Even if we imagine that the Amiga 3000UX had been successfully cost-reduced and made more competitive, we then need to ask what benefits there would have been for the customer, for developers, and for Sun in selling such a product. It seems plausible to imagine customers with substantial investments in software that only ran on Sun’s older machines, who might have needed newer, compatible hardware to keep that software running. Perhaps, in such cases, the suppliers of such software were not interested in, or capable of, porting the software to the SPARC processor family. Those customers might have kept buying machines to replace old ones or to increase the number of “seats” in their environment.

But then again, we could imagine that such customers, having multiple machines and presumably having them networked together, could have benefited from augmenting their old Motorola machines with new SPARC ones, potentially allowing the SPARC machines to run a suitable desktop environment and to use the old applications over the network. In such a scenario, the faster SPARC machines would have been far preferable as workstations, and with the emergence of the X Window System, a still lower-cost alternative would have been to acquire X terminals instead.

We might also question how many software developers would have been willing to abandon their users on an old architecture when it had been clear for some time that Sun would be transitioning to SPARC. Indeed, by producing versions of the same operating system for both architectures, one can argue that Sun was making it relatively straightforward for software vendors to prepare for future products and the eventual deprecation of their old products. Moreover, given the performance benefits of Sun’s newer hardware, developers might well have been eager to complete their own transition to SPARC and to entice customers to follow rapidly, if such enticement was even necessary.

Consequently, if there were customers stuck on Sun’s older hardware running applications that had been effectively abandoned, one could be left wondering what the scale of the commercial opportunity was in selling those customers more of the same. From a purely cynical perspective, given the idiosyncrasies of Sun’s software platform from time to time, it is quite possible that such customers would have struggled to migrate to another 68000 family Unix platform. And even without such portability issues and with the chance of running binaries on a competing Unix, the departure of many workstation vendors to other architectures may have left relatively few appealing options. The most palatable outcome might have been to migrate to other applications instead and to then look at the hardware situation with fresh eyes.

And we keep needing to return to that matter of performance. A 68030-based machine was arguably unappealing, like 80386-based systems, clearing the bar for workstation computing but not by much. If the cost of such a machine could have been reduced to an absurdly low price point then one could have argued that it might have provided an accessible entry point for users into a vendor’s “ecosystem”. Indeed, I think that companies like Commodore and Acorn should have put Unix-like technology in their low-end products, harmonising them with higher-end products actually running Unix, and having their customers gradually migrate as more powerful computers became cheaper.

But for workstations running what one commentator called “wedding-cake configurations” of the X Window System, graphical user interface toolkits, and applications, processors like the 68030, 80386 and ARM2 were going to provide a disappointing experience whatever the price. Meanwhile, Sun’s existing workstations were a mature product with established peripherals and accessories. Any cost-reduced workstation would have been something distinct from those existing products, impaired in performance terms and yet unable to make use of things like graphics accelerators which might have made the experience tolerable.

That then raises the question of the availability of the 68040. Could Commodore have boosted the Amiga 3000UX with that processor, bringing it up to speed with the likes of the ARM3-based R260 and 80486-based products, along with the venerable MIPS R2000 and early SPARC processors? Here, we can certainly answer in the affirmative, but then we must ask what this would have done to the price. The 68040 was a new product, arriving during 1990, and although competitively priced relative to the SPARC and 80486, it was still quoted at around $800 per unit, featuring in Apple’s Macintosh range in models that initially, in 1991, cost over $5000. Such a cost increase would have made it hard to drive down the system price.

In the chart above, the HP 9000/425t represents possibly the peak of 68040 workstation performance – “a formidable entry-level system” – costing upwards of $9000. But as workstation performance progressed, represented by new generations of DECstations and SPARCstations, the 68040 stalled, unable to be clocked significantly faster or otherwise see its performance scaled up. Prominent users such as Apple jumped ship and adopted PowerPC along with Motorola themselves! Motorola returned to the architecture after abandoning further development of the 88000 architecture, delivering the 68060 before finally consigning the architecture to the embedded realm.

In the end, even if a competitively priced and competitively performing workstation had been deliverable by Commodore, would it have been in Sun’s interests to sell it? Compatibility with older software might have demanded the continued development of SunOS and the extension of support for older software technologies. SunOS might have needed porting to Commodore’s hardware, or if Sun were content to allow Commodore to add any necessary provision to its own Unix implementation, then porting of those special Sun technologies would have been required. One can question whether the customer experience would have been satisfactory in either case. And for Sun, the burden of prolonging the lifespan of products that were no longer the focus of the company might have made the exercise rather unattractive.

Companies can always choose for themselves how much support they might extend to their different ranges of products. Hewlett-Packard maintained several lines of workstation products and continued to maintain a line of 68030 and 68040 workstations even after introducing their own PA-RISC processor architecture. After acquiring Apollo Computer, who had also begun to transition to their own RISC architecture from the 68000 family, HP arguably had an obligation to Apollo’s customers and thus renewed their commitment to the Motorola architecture, particularly since Apollo’s own RISC architecture, PRISM, was shelved by HP in favour of PA-RISC.

It is perhaps in the adoption of Sun technology that we might establish the essence of this tale. Amiga Unix was provided with Sun’s OPEN LOOK graphical user interface, and this might have given people reason to believe that there was some kind of deeper alliance. In fact, the alliance was really between Sun and AT&T, attempting to define Unix standards and enlisting the support of Unix suppliers. In seeking to adhere most closely to what could be regarded as traditional Unix – that defined by its originator, AT&T – Commodore may well have been picking technologies that also happened to be developed by Sun.

This tale rests on the assumption that Sun was not able to drive down the prices of its own workstations and that Commodore was needed to lead the way. Yet workstation prices were already being driven down by competition. Already by May 1990, Sun had announced the diskless SPARCstation SPC at the magic $5000 price point, although its lowest-cost colour workstation was reportedly the SPARCstation IPC at a much more substantial $10000. Nevertheless, its competitors were quite able to demonstrate colour workstations at reasonable prices, and eventually Sun followed their lead. Meanwhile, the Amiga 3000UX cost almost $8000 when coupled with a colour monitor.

With such talk of commodity hardware, it must not be forgotten that Sun was not without other options. For example, the company had already delivered SunOS on the Sun386i workstation in 1988. Although rather expensive, costing $10000, and not exactly a generic PC clone, it did support PC architecture standards. This arguably showed the way if the company were to target a genuine commodity hardware platform, and eventually Sun followed this path when making its Solaris operating system available for the Intel x86 architecture. But had Sun had a desperate urge to target commodity hardware back in 1990, partnering with a PC clone manufacturer would have been a more viable option than repurposing an Amiga model. That clone manufacturer could have been Commodore, too, but other choices would have been more convincing.

Conclusions and Reflections

What can we make of all of this? An idle assertion with a veneer of plausibility and a hint of glory denied through the notoriously poor business practices of the usual suspects. Well, we can obviously see that nothing is ever as simple as it might seem, particularly if we indulge every last argument and pursue every last avenue of consideration. And yet, the matter of Commodore making a Unix workstation and Sun Microsystems being “interested in rebadging the A3000UX” might be as simple as imagining a rather short meeting where Commodore representatives present this opportunity and Sun’s representatives firmly but politely respond that the door has been closed on a product range not long for retirement. Thanks but no thanks. The industry has moved on. Did you not get that memo?